Developing applications for Microsoft Surface isn’t that hard

When you talk about developing for Surface, you are talking about something really different. It’s not your everyday GUI; Microsoft calls it a NUI (natural user interface). A NUI has four aspects:

- Direct interaction (with your fingers or tagged objects)

- Multi-touch interface

- Multi-user interface

- Object recognition by tag

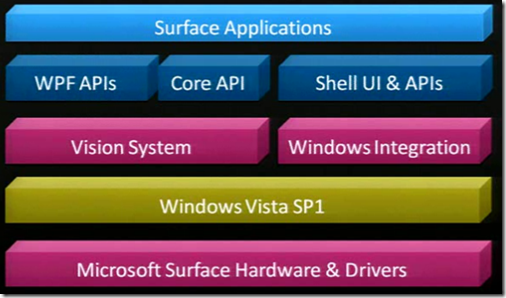

Let’s look at the Surface architecture.

The Surface architecture

Everything is built on the Microsoft Surface hardware and its specific drivers. On top of that runs Windows Vista SP1.

Because Surface is not a regular PC, it can’t show all the dialogs and other windows that Vista normally does. That is what the Windows integration layer is for: it makes sure nothing is shown on the Surface that the Surface UI can’t handle. Alongside it there is a custom shell and a set of APIs.

To work with your fingers and tagged items, you need something that can read them. Surface has five cameras, together called the Vision System, which look at the surface from every angle and can determine an object’s orientation. This data is reported to the SDKs: the core API for regular .NET interaction, and the WPF API for the nice eye candy. More information about the WPF API follows later in this post.
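The post doesn’t describe the format in which the Vision System hands its data to the SDKs, so the following is only a hypothetical model: it assumes each camera frame arrives as a mapping from a contact id to that contact’s position and orientation, and shows how comparing two consecutive frames could yield down, changed and up events like the ones the SDK exposes. `diff_frames` and the event strings are illustrative names, not part of the Surface SDK.

```python
from typing import Dict, List, Tuple

# Hypothetical per-contact data: (x, y, orientation in degrees).
ContactState = Tuple[float, float, float]
Frame = Dict[int, ContactState]  # contact id -> state


def diff_frames(previous: Frame, current: Frame) -> List[Tuple[str, int]]:
    """Turn two consecutive vision frames into (event, contact_id) pairs.

    Illustrative only: models how raw contact data could become
    down/changed/up events; it is not the Surface SDK's implementation.
    """
    events: List[Tuple[str, int]] = []
    for contact_id in sorted(current):
        if contact_id not in previous:
            events.append(("ContactDown", contact_id))  # new contact appeared
        elif current[contact_id] != previous[contact_id]:
            events.append(("ContactChanged", contact_id))  # moved or rotated
    for contact_id in sorted(previous):
        if contact_id not in current:
            events.append(("ContactUp", contact_id))  # contact disappeared
    return events


# Two fingers touch down; then one moves and the other lifts.
frame1: Frame = {1: (100.0, 200.0, 0.0), 2: (300.0, 50.0, 90.0)}
frame2: Frame = {1: (110.0, 205.0, 0.0)}
print(diff_frames({}, frame1))      # [('ContactDown', 1), ('ContactDown', 2)]
print(diff_frames(frame1, frame2))  # [('ContactChanged', 1), ('ContactUp', 2)]
```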

WPF API

The WPF API provides you with the capabilities to respond to touch.

In addition, it provides several toolsets:

- standard WPF controls (button, list, ...)

- custom controls for the Surface-specific UX (user experience)

- base classes for building your own Surface controls

When you create a control, you have to think in a completely different way.

For instance, when you respond to a MouseDown event, you would normally read the MouseEventArgs.

On Surface you don’t have a mouse; you have contacts (from your finger, for example). So instead of MouseDown you have ContactDown, and instead of MouseEventArgs you have ContactEventArgs.
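The practical difference is that a mouse gives you a single pointer, while Surface can report many contacts at the same time, each with its own id. A minimal sketch of that idea, using a hypothetical `Element` class and simple rectangular hit testing in place of WPF’s real routed events: every contact is routed to the element under it, so two people can press two different elements at the same moment, and one element can hold several fingers.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Element:
    """Hypothetical rectangular UI element (not a Surface SDK type)."""
    name: str
    x: float
    y: float
    width: float
    height: float
    active_contacts: Set[int] = field(default_factory=set)

    def hit(self, px: float, py: float) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)

    def on_contact_down(self, contact_id: int) -> None:
        # Analogous to a ContactDown handler: each contact arrives separately,
        # identified by its id, instead of one shared mouse pointer.
        self.active_contacts.add(contact_id)


def route_contact_down(elements: List[Element], contact_id: int,
                       px: float, py: float) -> Optional[Element]:
    """Deliver a contact-down to the topmost element under the point."""
    for element in reversed(elements):  # last in the list is drawn on top
        if element.hit(px, py):
            element.on_contact_down(contact_id)
            return element
    return None  # the contact landed on empty space


left = Element("left button", 0, 0, 100, 50)
right = Element("right button", 200, 0, 100, 50)
ui = [left, right]
route_contact_down(ui, contact_id=1, px=10, py=10)   # user A
route_contact_down(ui, contact_id=2, px=250, py=20)  # user B, same moment
route_contact_down(ui, contact_id=3, px=20, py=30)   # second finger on left
print(sorted(left.active_contacts), sorted(right.active_contacts))  # [1, 3] [2]
```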

The API also contains the familiar controls, such as menus, buttons and checkboxes, but all with slightly different behavior.

For instance, you can open multiple menus at once because Surface supports multi-touch. A checkbox is only clicked once everyone has lifted their finger from it. There are also text controls, such as a text box. When you tap a text control, a virtual keyboard appears where you can enter your content. This virtual keyboard can also be customized.
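That checkbox rule can be modeled as a small state machine: the control remembers which contacts are on it and only toggles when the last one lifts. This is a hypothetical Python model (`MultiTouchCheckBox` is not an SDK class), and it deliberately ignores details such as a finger sliding off the control before lifting, which a real control may treat differently.

```python
from typing import Set


class MultiTouchCheckBox:
    """Hypothetical model of a checkbox shared by several fingers."""

    def __init__(self) -> None:
        self.is_checked = False
        self._contacts: Set[int] = set()  # contacts currently on the control

    def contact_down(self, contact_id: int) -> None:
        self._contacts.add(contact_id)

    def contact_up(self, contact_id: int) -> None:
        if contact_id not in self._contacts:
            return  # ignore contacts that never went down on this control
        self._contacts.remove(contact_id)
        # The "click" only happens once every finger has been lifted.
        if not self._contacts:
            self.is_checked = not self.is_checked


box = MultiTouchCheckBox()
box.contact_down(1)
box.contact_down(2)
box.contact_up(1)
print(box.is_checked)  # False: finger 2 is still down
box.contact_up(2)
print(box.is_checked)  # True: the last finger was lifted
```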

As you can see, developing for Surface isn’t all that hard, as long as you have the right framework. If you already have some WPF experience, creating apps for Surface is easy: you add a reference to the Surface API and use the right tags in XAML. For instance, a `<Window>` becomes a `<SurfaceWindow>` and a `<Button>` becomes a `<SurfaceButton>`.

ScatterView control

The ScatterView control lets you quickly give the objects on the Surface a 360° view: users can freely move and rotate them. Why would you want that? When there are multiple objects on the screen and multiple people sitting around the Surface, the chance that someone sees something upside down is fairly big. Let’s put this into practice. Say you want to create an app that displays photos. You can build a photo viewer for Surface with only one line of code and some XAML: you add the ScatterView control to the XAML, and in the code-behind you do the necessary data binding. And that’s it!
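Turning an item to face you comes down to a two-finger rotate (and pinch) gesture. The sketch below shows the standard math for such a gesture: compare the angle and the length of the line between the two contacts before and after the move. It illustrates the technique under that assumption; it is not ScatterView’s actual source, and `two_finger_manipulation` is a hypothetical name.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def two_finger_manipulation(a0: Point, b0: Point,
                            a1: Point, b1: Point) -> Tuple[float, float]:
    """Return (rotation in degrees, scale factor) for a two-finger gesture.

    a0/b0 are the two contacts before the move, a1/b1 the same contacts
    after it. Assumes the two contacts do not start at the same point.
    """
    before = (b0[0] - a0[0], b0[1] - a0[1])
    after = (b1[0] - a1[0], b1[1] - a1[1])
    # Rotation: change in the angle of the line between the two fingers.
    angle = math.degrees(math.atan2(after[1], after[0])
                         - math.atan2(before[1], before[0]))
    # Normalize to [-180, 180) so the item turns the short way round.
    angle = (angle + 180.0) % 360.0 - 180.0
    # Scale: change in the distance between the two fingers (pinch/spread).
    scale = math.hypot(*after) / math.hypot(*before)
    return angle, scale


# One finger stays put while the other swings a quarter turn and moves out.
rotation, scale = two_finger_manipulation((0, 0), (100, 0), (0, 0), (0, 200))
print(round(rotation, 6), scale)  # 90.0 2.0
```

Applying the returned rotation to the item is what lets a user on the far side of the table flip a photo the right way up.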

Recognizing items placed on the Surface

This is another big feature of Surface: it can recognize items that carry a custom tag.

You have two types of tags:

- Contact.Tag.Byte: provides 256 unique values

- Contact.Tag.Identity: provides a very large number of unique values, enough to identify anything you can imagine
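The 256 for byte tags is simply what one byte can hold: 2^8 = 256 values, 0 through 255. The sketch below checks that range and looks up which physical item a byte tag belongs to. The `ITEMS_BY_BYTE_TAG` table is hypothetical app data, and the identity-tag figure rests on an assumption made only for illustration: that an identity tag is a pair of 64-bit numbers.

```python
from typing import Dict, Optional

BYTE_TAG_VALUES = 2 ** 8  # one byte -> 256 distinct values (0..255)

# Assumption for illustration only: an identity tag is a pair of 64-bit
# numbers, which would give 2**128 possible combinations.
IDENTITY_TAG_VALUES = 2 ** 64 * 2 ** 64

# Hypothetical app data: which physical item carries which byte tag.
ITEMS_BY_BYTE_TAG: Dict[int, str] = {0x0A: "coffee mug", 0xC1: "phone"}


def is_valid_byte_tag(value: int) -> bool:
    return 0 <= value < BYTE_TAG_VALUES


def item_for_byte_tag(value: int) -> Optional[str]:
    """Return the item registered for a byte tag, or None if unknown."""
    if not is_valid_byte_tag(value):
        raise ValueError(f"byte tag must be 0..255, got {value}")
    return ITEMS_BY_BYTE_TAG.get(value)


print(BYTE_TAG_VALUES)          # 256
print(item_for_byte_tag(0x0A))  # coffee mug
print(item_for_byte_tag(0x0B))  # None
```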

Now, once we can identify items, what can happen with them?

You can show some kind of animation around the item, and you also need to update your app’s UI when the item is moved or removed from the Surface. For all of this there is a control called TagVisualizer.

TagVisualizer control

What do you have to do to work with the TagVisualizer? You need to set up a few things:

- First, create the UI that will be shown around, under or next to the placed object

- Specify the tag that you are looking for

- Position the UI relative to the tag

Some properties that you can set:

- The maximum number of objects allowed

- What to do when a placed object is removed
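The setup steps and properties above map naturally onto a small definition object. Here is a hypothetical Python model of that configuration; `VisualizationDefinition`, `RemovedBehavior` and `TagVisualizerModel` are illustrative names, not the SDK’s classes. It matches a placed tag against the definitions, enforces the maximum count, positions the UI with an offset that rotates along with the tag, and applies a removal behavior.

```python
import math
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional, Tuple

Position = Tuple[float, float]


class RemovedBehavior(Enum):
    DISAPPEAR = "disappear"  # remove the UI as soon as the object is lifted
    PERSIST = "persist"      # keep the UI on screen where the object was


@dataclass
class VisualizationDefinition:
    tag_value: int                 # the tag you are looking for
    ui_name: str                   # the UI to show for it
    offset: Position = (0.0, 0.0)  # UI position relative to the tag
    max_count: int = 1             # max objects with this tag at once
    on_removed: RemovedBehavior = RemovedBehavior.DISAPPEAR


@dataclass
class TagVisualizerModel:
    definitions: List[VisualizationDefinition]
    # contact id -> (matching definition, where its UI is drawn)
    visible: Dict[int, Tuple[VisualizationDefinition, Position]] = field(
        default_factory=dict)

    def tag_down(self, contact_id: int, tag_value: int, x: float, y: float,
                 orientation_deg: float) -> Optional[Position]:
        for definition in self.definitions:
            if definition.tag_value != tag_value:
                continue
            in_use = sum(1 for d, _ in self.visible.values() if d is definition)
            if in_use >= definition.max_count:
                return None  # limit reached: ignore this object
            # Rotate the offset with the object so the UI follows its orientation.
            angle = math.radians(orientation_deg)
            ox, oy = definition.offset
            position = (x + ox * math.cos(angle) - oy * math.sin(angle),
                        y + ox * math.sin(angle) + oy * math.cos(angle))
            self.visible[contact_id] = (definition, position)
            return position
        return None  # no definition for this tag

    def tag_up(self, contact_id: int) -> None:
        entry = self.visible.get(contact_id)
        # PERSIST leaves the entry in place (it still counts toward max_count).
        if entry is not None and entry[0].on_removed is RemovedBehavior.DISAPPEAR:
            del self.visible[contact_id]


viz = TagVisualizerModel([
    VisualizationDefinition(tag_value=0x0A, ui_name="mug menu", offset=(50.0, 0.0)),
])
print(viz.tag_down(1, 0x0A, 100.0, 100.0, 90.0))  # menu placed relative to the rotated mug
print(viz.tag_down(2, 0x0A, 300.0, 300.0, 0.0))   # None: max_count is 1
```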

If you are wondering how these tags work: they are basically a pattern of points on a piece of material that reflects infrared light. The material isn’t that special; anything that reflects IR will do. The Surface team is also working on a tool that you can use to print the tags.

Windows 7 and the Surface

You may have heard that Windows 7 is also going to provide multi-touch support. This means that all of the functionality Surface has should also be possible on Windows 7. There is more good news regarding the framework: Microsoft is going to bring all the Surface-specific controls into WPF 4.0, which means you’ll have instant access to the ScatterView, the TagVisualizer, etc.

If you are interested in this, I encourage you to watch the following video from PDC2008: Developing for Microsoft Surface.

Another interesting link is the community site, community.surface.com. Too bad it is by invitation only…